Qwen3-0.6B - Enhanced KTO

Model Description

This is a merged, standalone model fine-tuned from Qwen/Qwen3-0.6B using Enhanced KTO, a variant of Kahneman-Tversky Optimization (KTO) that adds further Prospect Theory components.

This model was developed as part of thesis research on LLM Alignment using Preference Optimization Methods.

Model Details

| Property | Value |
|---|---|
| Base Model | Qwen/Qwen3-0.6B |
| Training Method | Enhanced KTO |
| Model Type | Merged Standalone Model |
| Training Date | December 2025 |
| Framework | PyTorch + Transformers + PEFT |

Benchmark Results

| Benchmark | Score |
|---|---|
| HellaSwag (10-shot) | 0.336 |
| TruthfulQA (0-shot MC2) | 0.431 |
| MMLU-Mini (5-shot) | 0.373 |

Enhanced KTO Components

This implementation combines Prospect Theory-inspired components with techniques borrowed from related preference optimization methods:

| Component | Status | Description |
|---|---|---|
| Value Function | Active | Asymmetric loss treatment reflecting loss aversion (losses weighted ~2x gains) |
| Probability Weighting | Active | Non-linear transformation of model confidence scores |
| Odds Ratio Integration | Active | ORPO-inspired reference-free preference modeling |
| BCO Shift | Implemented but Disabled | Baseline-corrected optimization (see note below) |

Note on BCO Shift

The BCO (Baseline-Corrected Optimization) Shift component was fully implemented in the codebase following the approach described in recent preference optimization literature. However, it was disabled for final training due to the following observations during hyperparameter tuning:

- Training Instability: Enabling BCO Shift in conjunction with the Value Function and Probability Weighting led to gradient instability in approximately 40% of training runs

- No Significant Improvement: Preliminary experiments did not show meaningful performance gains when BCO was enabled

- Complexity Trade-off: The added complexity did not justify the marginal (and inconsistent) benefits

The BCO Shift code remains in the implementation for transparency and future research. We hypothesize that a staged training approach (enabling BCO after initial convergence) or component-specific gradient clipping may enable stable integration.

Training Plots

Training Loss Curve

Rewards During Training

KL Divergence

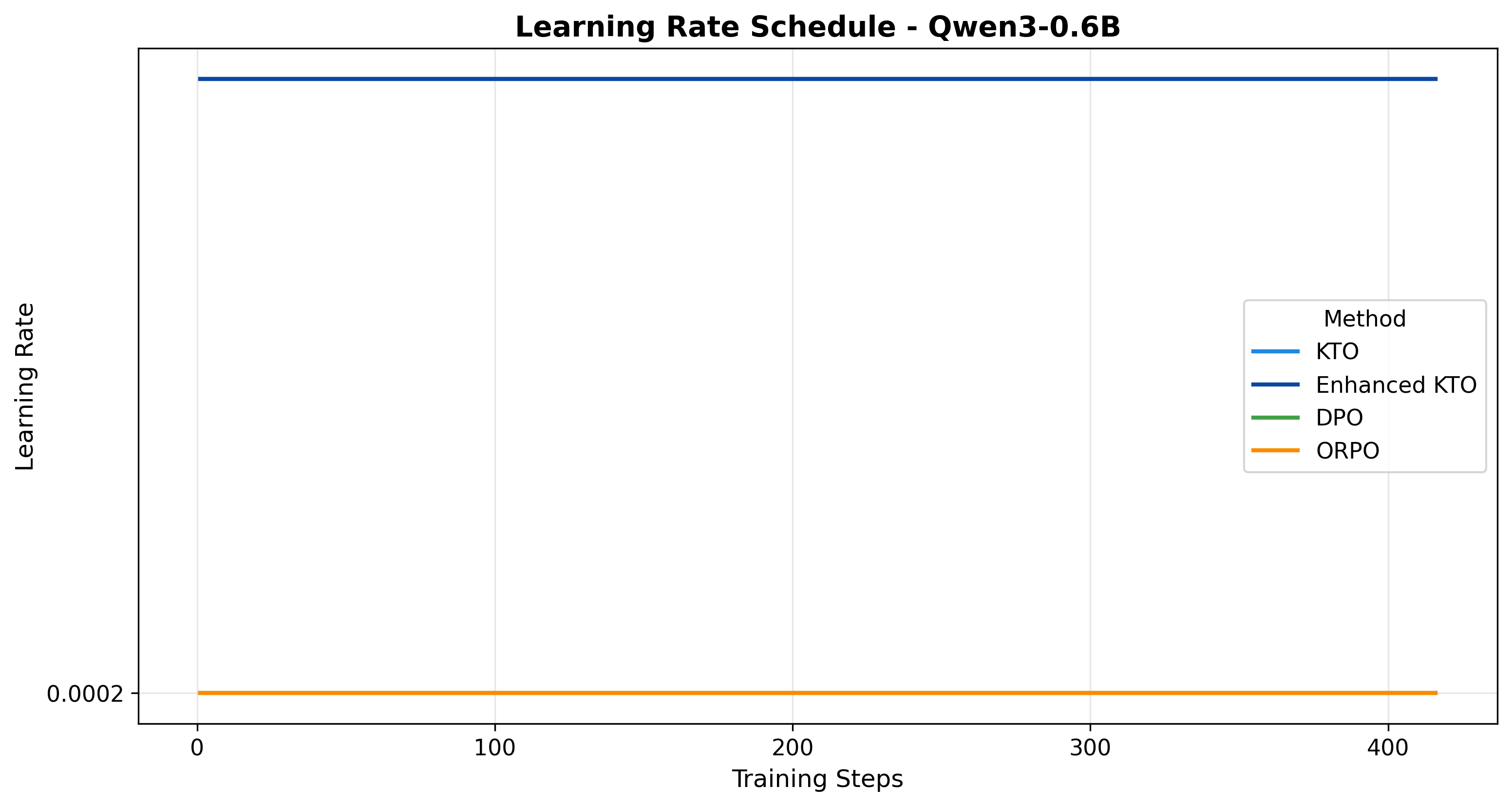

Learning Rate Schedule

Training Configuration

| Parameter | Value |
|---|---|
| Epochs | 1 |
| Batch Size | 2 |
| Gradient Accumulation | 8 |
| Effective Batch Size | 16 |
| Learning Rate | 2e-4 |
| Max Sequence Length | 512 |
| LoRA Rank | 16 |
| LoRA Alpha | 32 |
| Dataset | UltraFeedback Binarized |
| Beta (KTO) | 0.1 |
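The derived quantities in the table can be checked with a small sketch. The `TrainingConfig` class and its field names are hypothetical and are not taken from the training code; the effective batch size calculation assumes a single device, and the LoRA scaling factor assumes the standard `alpha / rank` convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hypothetical container mirroring the values in the table above."""

    epochs: int = 1
    per_device_batch_size: int = 2
    gradient_accumulation_steps: int = 8
    learning_rate: float = 2e-4
    max_sequence_length: int = 512
    lora_rank: int = 16
    lora_alpha: int = 32
    beta: float = 0.1

    @property
    def effective_batch_size(self) -> int:
        # Examples consumed per optimizer step, assuming one device.
        return self.per_device_batch_size * self.gradient_accumulation_steps

    @property
    def lora_scaling(self) -> float:
        # Standard LoRA scales the low-rank update by alpha / rank.
        return self.lora_alpha / self.lora_rank


config = TrainingConfig()
print(config.effective_batch_size, config.lora_scaling)
```

With these values the effective batch size is 2 x 8 = 16, matching the table, and the LoRA update is scaled by 32 / 16 = 2.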

Usage

Direct Loading (Merged Model)

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("Nishef/Qwen3-0.6B-Full_ENHANCED_KTO_20251225_162818")

tokenizer = AutoTokenizer.from_pretrained("Nishef/Qwen3-0.6B-Full_ENHANCED_KTO_20251225_162818")

# Generate text

inputs = tokenizer("Hello, how are you?", return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=100)

print(tokenizer.decode(outputs[0]))
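For conversational prompts, it may work better to format the input with the tokenizer's chat template rather than passing raw text. The sketch below assumes that this checkpoint ships with the chat template inherited from Qwen/Qwen3-0.6B, which the card does not confirm; the helper names `build_messages` and `generate_reply` are hypothetical.

```python
MODEL_ID = "Nishef/Qwen3-0.6B-Full_ENHANCED_KTO_20251225_162818"


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Wrap a user prompt in the role/content format expected by chat templates."""
    return [{"role": "user", "content": prompt}]


def generate_reply(prompt: str, max_new_tokens: int = 100) -> str:
    """Generate a reply using the tokenizer's chat template (assumed to be present)."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Render the conversation to text and append the assistant turn marker.
    text = tokenizer.apply_chat_template(
        build_messages(prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (downloads the model on first use):
# print(generate_reply("Hello, how are you?"))
```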

Methodology

Enhanced KTO vs Standard KTO

Enhanced KTO extends the standard KTO algorithm with additional components from Kahneman and Tversky's Prospect Theory, together with an odds ratio term borrowed from ORPO:

Value Function: In its default configuration, standard KTO weights desirable and undesirable examples equally. Enhanced KTO applies an asymmetric value function in which losses are weighted approximately 2x more than equivalent gains, reflecting the psychological phenomenon of loss aversion.
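As a rough illustration of this asymmetry, the sketch below implements a Prospect Theory style value function in plain Python. The name `prospect_value`, the curvature parameter `alpha`, and the exact setting `loss_aversion=2.0` are assumptions chosen to match the "approximately 2x" figure; the card does not specify the functional form used in training.

```python
def prospect_value(x: float, alpha: float = 1.0, loss_aversion: float = 2.0) -> float:
    """Value of outcome x relative to a reference point of 0.

    Gains map to x**alpha; losses map to -loss_aversion * (-x)**alpha,
    so a loss hurts loss_aversion times more than an equal gain helps.
    alpha and loss_aversion are illustrative defaults, not trained values.
    """
    if x >= 0:
        return x ** alpha
    return -loss_aversion * ((-x) ** alpha)


gain = prospect_value(0.5)
loss = prospect_value(-0.5)
print(gain, loss)
```

With `alpha=1.0` the function is piecewise linear, so the loss branch is exactly twice as steep as the gain branch. Tversky and Kahneman's 1992 estimates were roughly `alpha = 0.88` and a loss aversion coefficient of 2.25.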

Probability Weighting: Instead of using raw model probabilities, Enhanced KTO applies a non-linear weighting function that overweights small probabilities and underweights large ones, as observed in human decision-making.
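One common form with this shape is the Tversky-Kahneman (1992) weighting function, sketched below. This is an illustration under assumptions: the card does not state which weighting function Enhanced KTO uses, and both the name `weight_probability` and the default `gamma=0.61` (the 1992 estimate for gains) are illustrative choices.

```python
def weight_probability(p: float, gamma: float = 0.61) -> float:
    """Inverse-S-shaped weighting: w(p) = p^g / (p^g + (1 - p)^g)^(1/g).

    For gamma < 1, small probabilities are overweighted and large ones
    underweighted. gamma here is an illustrative default.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    numerator = p ** gamma
    denominator = (numerator + (1.0 - p) ** gamma) ** (1.0 / gamma)
    return numerator / denominator


for p in (0.01, 0.5, 0.9):
    print(f"p={p:.2f} -> w(p)={weight_probability(p):.3f}")
```

The endpoints are preserved (`w(0) = 0` and `w(1) = 1`), while intermediate values are bent toward the middle of the range.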

Odds Ratio: Borrowing from ORPO, the odds ratio component provides reference-free preference modeling, reducing memory requirements while maintaining alignment quality.
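The odds ratio term can be sketched as follows, based on the formulation in the ORPO paper. How Enhanced KTO combines this term with the KTO loss is not described in the card, so the function names and the standalone loss below are illustrative assumptions only.

```python
import math


def log_odds(p: float) -> float:
    """Log of odds(p) = p / (1 - p), for p strictly between 0 and 1."""
    return math.log(p) - math.log1p(-p)


def odds_ratio_loss(p_chosen: float, p_rejected: float) -> float:
    """ORPO-style term: -log(sigmoid(log odds ratio)).

    In ORPO, each p is the length-normalized likelihood of a response
    under the policy, so no reference model is needed to compute it.
    """
    log_ratio = log_odds(p_chosen) - log_odds(p_rejected)
    # -log(sigmoid(z)) equals log(1 + exp(-z)).
    return math.log1p(math.exp(-log_ratio))


print(odds_ratio_loss(0.6, 0.2))
print(odds_ratio_loss(0.2, 0.6))
```

The loss is smaller when the preferred response is more likely than the rejected one, and it equals `log(2)` when both are equally likely.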

Citation

@misc{qwen3_0.6b_enhanced_kto_2025,

title = {Qwen3-0.6B Fine-tuned with Enhanced KTO},

author = {Thesis Research},

year = {2025},

publisher = {HuggingFace},

note = {BCO Shift implemented but disabled for stability},

url = {https://huggingface.co/Nishef/Qwen3-0.6B-Full_ENHANCED_KTO_20251225_162818}

}

Acknowledgments

- Base Model: Qwen/Qwen3-0.6B

- KTO Paper: KTO: Model Alignment as Prospect Theoretic Optimization

- ORPO Paper: ORPO: Monolithic Preference Optimization without Reference Model

- Training Framework: Hugging Face Transformers

License

Apache 2.0

